An example of this imperative to make things better is Choosing Wisely, an initiative launched in 2012 in which the American Board of Internal Medicine (ABIM) Foundation challenged specialty societies to produce lists of tests and interventions that doctors in their specialty routinely use but that are not supported by evidence. The explicit goal of Choosing Wisely was to identify and promote care that is (1) supported by evidence; (2) not duplicative of other tests or procedures already received; (3) free from harm; and (4) truly necessary. In response to this challenge, medical specialty societies asked their members to “choose wisely” by identifying tests or procedures commonly used in their field whose necessity should be questioned and discussed. The resulting lists of “Things Providers and Patients Should Question” were designed to spark discussion about the need (or lack thereof) for many frequently ordered tests or treatments.

Let’s make no mistake here. Choosing Wisely was a big deal and remains so, which is why we here at SBM have blogged about various aspects of it several times. Specialty societies have produced dozens of lists of interventions (usually in groups of five) that are commonly used but not supported by evidence. The biggest cancer specialty society, the American Society of Clinical Oncology (ASCO), produced a list in which two of the items involved my specialty, breast cancer (more on that later). Some of the lists have been stellar; some have been relatively self-serving. For instance, while I applaud the American College of Medical Toxicology and the American Academy of Clinical Toxicology for rejecting the use of homeopathic medications, “provoked” urine heavy metal tests (in which the patient receives a chelating agent before the urine test, thus guaranteeing high levels of metals in the urine), and the like, their choice of items struck me as a bit self-serving in that it avoided what is likely the far more common misuse and overuse of more conventional laboratory tests.

Be that as it may, now that Choosing Wisely has been around for over three years, it’s natural to ask: Is it working? Has the initiative had an effect on the use and overuse of tests and interventions that don’t benefit patients? This is a difficult question to answer, given the large number of Choosing Wisely lists, and, given how long it takes to do studies, three years is really not enough time for a full assessment. It is, however, sufficient time to get some early indications. Last week, a major study published in JAMA Internal Medicine suggested that the answer to that question is, for the most part: Not yet. Indeed, Sanjay Gupta, MD, characterized the results as “disappointing.”

Choosing Wisely: Not affecting practice that much thus far

Let’s go to the tape, as I like to put it, and examine the study by Rosenberg et al., “Early Trends Among Seven Recommendations From the Choosing Wisely Campaign.” Now, it must be pointed out right off the bat that this study was done by an insurance company. The investigators are all affiliated with Anthem, and the team was led by Alan Rosenberg, MD, the firm’s vice president of clinical pharmacy and medical policy. Anthem, of course, is not a disinterested party: were Choosing Wisely recommendations to result in widespread changes in medical practice, the company would likely save millions, if not billions, of dollars. None of this is to denigrate the study; it’s actually a decent attempt to assess the impact of Choosing Wisely. I mention who did the study mainly because it affected the study design. Of all the interventions included in the initial 2012 Choosing Wisely lists, these seven were chosen because their usage could be analyzed via commercial insurance claims data using International Classification of Diseases, Ninth Revision (ICD-9) codes; Current Procedural Terminology (CPT) codes; and, whenever appropriate, National Drug Codes and Logical Observation Identifiers Names and Codes (LOINC). The seven measures were also chosen to represent a wide variety of Choosing Wisely recommendations:

- imaging tests for headache with uncomplicated conditions

- cardiac imaging for members without a history of cardiac conditions

- preoperative chest x-rays with unremarkable history and physical examination results

- low back pain imaging for members without red-flag conditions

- human papillomavirus (HPV) testing for women younger than 30 years

- antibiotics for acute sinusitis

- prescription nonsteroidal anti-inflammatory drugs (NSAIDs) for members with select chronic conditions (hypertension, heart failure, or chronic kidney disease).

You can read the details yourself for these recommendations, including the rationale for each of them, in a table provided in the paper, which is not behind a paywall.

The next part of the analysis was relatively straightforward conceptually, if not methodologically: to analyze the usage of these services in the target population defined by each Choosing Wisely recommendation before and after the publication of Choosing Wisely in the second quarter of 2012. The number and percentage of members with claims for low-value services under each recommendation were assessed quarterly for at least ten quarters through the third quarter of 2013, with the starting point for each measure ranging from 2010 through 2011, depending on data availability. For one measure (cardiac imaging), the numbers were so large that the investigators analyzed a random sample.

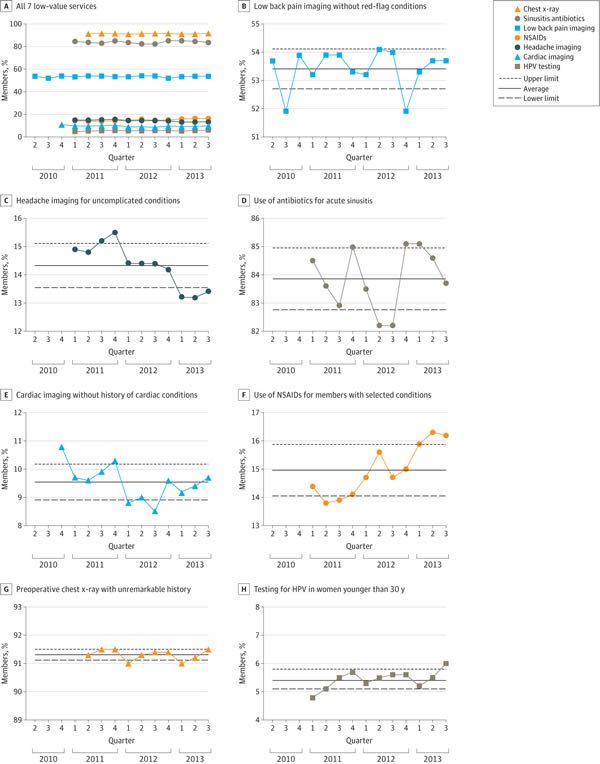

So what were the results? A picture is worth a thousand words, as they say, and here’s the money figure:

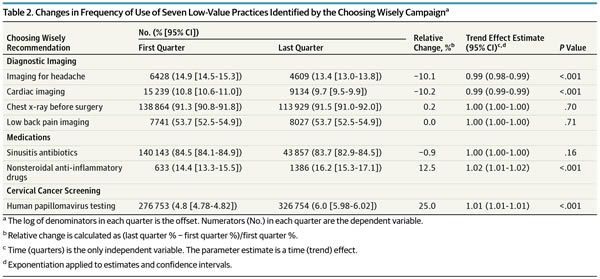

And a table shows exactly which interventions changed and by how much:

As you can see, of the seven low-value services analyzed, two showed modest but statistically significant decreases (imaging for uncomplicated headache, a relative decline of 10.1%; and cardiac imaging in low-risk patients, 10.2%). However, as the authors note, although these declines were statistically significant, the effect sizes were modest to marginal and “may not represent clinically significant changes.” Think of it this way: On an absolute basis, the decline was only 1.5 percentage points for imaging for headache and 1.1 percentage points for cardiac imaging in low-risk patients.
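The gap between the relative and absolute figures is simple arithmetic: a relative decline is the absolute decline divided by the baseline rate. Assuming both figures quoted above describe the change between the same two time points (my assumption; the paper’s exact method may differ), they imply approximate baseline rates, subject to rounding:

$$
\text{relative decline} = \frac{p_{\text{before}} - p_{\text{after}}}{p_{\text{before}}}
\;\;\Longrightarrow\;\;
p_{\text{before}} = \frac{p_{\text{before}} - p_{\text{after}}}{\text{relative decline}}
$$

$$
\text{headache imaging: } \frac{1.5}{0.101} \approx 14.9\%, \qquad
\text{cardiac imaging: } \frac{1.1}{0.102} \approx 10.8\%
$$

On those assumptions, a roughly 10% relative drop from a baseline near 15% still leaves about 13 of every 100 patients in the headache group receiving imaging.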

In contrast, the frequency of the most highly used services, such as preoperative chest x-rays and low back pain imaging, remained stable. The use of antibiotics for sinusitis also remained essentially stable. Of note, this is an intervention received by over 80% of the target population, which is particularly depressing: it indicates that the vast majority of patients with uncomplicated sinusitis who don’t need antibiotics are getting them anyway, and that Choosing Wisely has done pretty much nothing to change this, at least in the population insured by Anthem. Also depressingly, the use of two of the targeted interventions actually increased significantly during the study period: prescription NSAIDs for members with select chronic conditions rose by 12.5%, and HPV testing for women younger than 30 years rose by 25%.

This study is not without limitations, the main one being the limitation shared by any study based on administrative claims data: such data often do not adequately capture the clinical circumstances that led to the ordering of a service or test, which means that some “inappropriate” uses might, in fuller context, actually be appropriate for individual patients. It is also very difficult to tell whether the changes seen from quarter to quarter reflect Choosing Wisely or trends due to other factors. The authors note, for instance:

It is likely that several factors in addition to the Choosing Wisely campaign may have been responsible for changes or lack of changes in frequency of low-value services. The first is secular trends and preexisting patterns. For example, during the study period, guidelines from different organizations regarding Papanicolaou testing in young women converged around the notion that those younger than 21 years should not get Papanicolaou tests. This might explain the relatively rare use of Papanicolaou tests. At the same time, HPV testing became more widely available in the marketplace and its use increased over time.

Indeed, the marketplace and the availability of tests are often huge factors in driving their use. Another factor to consider is that this is a rather specific population: Patients with commercial insurance through a large health insurance company. There could conceivably be differences, potentially even large ones, if, for instance, Medicare or Medicaid claims were studied. Even so, taking the most generous possible interpretation of the data, the best that can be said is that Choosing Wisely has had a marginal effect on the use of only two of the seven services studied and appears to have had no effect at all on the other five. Indeed, the authors note:

Frequency of the most highly used services either remained stable (preoperative chest x-rays and low back pain imaging) or decreased slightly (sinusitis antibiotics) during the study period, which underscores the view that simple publication of recommendations—such as the Choosing Wisely lists—is insufficient to produce major changes to practice.

That’s because change is hard, and more has to be done besides just putting information out there.

Choosing Wisely and quality improvement

I don’t believe I’ve discussed this before, but I have personal experience in evaluating adherence to Choosing Wisely guidelines. The reason is that I am the co-director of a statewide collaborative quality initiative (CQI) for breast cancer care, and we chose to examine adherence to one of the ASCO Choosing Wisely guidelines, specifically: Don’t perform PET, CT and radionuclide bone scans in the staging of early breast cancer at low risk for metastasis. Basically, this guideline recommends against the routine use of advanced imaging (PET, CT, and bone scans) to stage women with clinical stage I or II breast cancer because the yield is so low that the vast majority of “positive” findings will be false positives and result in delays in care and possibly harm due to additional tests, including invasive biopsies.
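The claim that most “positive” staging scans in low-risk early breast cancer will be false positives is an application of Bayes’ theorem: when the condition being sought is rare, even a reasonably accurate test yields mostly false alarms. The minimal sketch below uses purely hypothetical sensitivity, specificity, and prevalence values (they are not figures from ASCO, the guideline, or any study) to show the effect; the function name is likewise just illustrative.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Fraction of positive test results that are true positives (Bayes' theorem)."""
    true_pos = sensitivity * prevalence                  # diseased patients correctly flagged
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # healthy patients wrongly flagged
    return true_pos / (true_pos + false_pos)


# Hypothetical illustration only: a scan that is 90% sensitive and 90% specific,
# used in a group in which 1% of patients actually have detectable metastases.
ppv = positive_predictive_value(sensitivity=0.90, specificity=0.90, prevalence=0.01)
print(f"Share of positive scans that are true positives: {ppv:.1%}")
```

With these made-up numbers, only about 1 in 12 positive scans would reflect real metastatic disease; the other 11 would be false positives, each potentially triggering further tests or a biopsy. The lower the true prevalence in the staged group, the worse this ratio gets.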

Working with our director and team to decrease the use of these tests in women with early-stage breast cancer has been an eye-opening experience for me. I can’t go into too much detail because of my position and because our results haven’t been published yet, but I can discuss a couple of issues in general terms. For example, although our CQI has a large database with many data elements meticulously entered, it has proven far more difficult than I would ever have guessed to accurately capture scans of this sort that might be considered inappropriate under Choosing Wisely. The reason is that advanced scans are not considered inappropriate in women with early-stage cancers who have symptoms suggestive of metastases, and such symptoms frequently don’t show up in an automated search of the database, necessitating a chart review to determine whether imaging was appropriate in a given case. It took us far longer than I would have expected to develop a process ensuring that clinically indicated scans were not inappropriately flagged as low value under this Choosing Wisely measure. This is just the sort of issue that a study like Anthem’s would miss, because it takes a chart review to identify the clinical features that move a patient out of the population targeted by a Choosing Wisely measure. Then there was another issue that never would have occurred to me, namely how to exclude scans ordered for other indications. To say that I’ve learned a lot about how difficult it is to put the “rubber to the road” and implement measures like Choosing Wisely would be an understatement.

Our CQI’s experience is similar to what’s been published in the literature. For example, Hahn et al. examined the use of advanced imaging in two western HMOs and found that about 15% of early-stage breast cancer patients received at least one advanced imaging test, with wide intraregional variability in testing rates. In a study of Canadian oncologists, Simos et al. recently found that:

The majority of breast cancer doctors are aware of and generally agree that guidelines pertaining to staging imaging for early breast cancer are reflective of evidence. Despite this, adherence is variable and factors such as local practice patterns and disease biology may play a role. Alternative strategies, beyond simply publishing recommendations, are therefore required if there is to be a sustained change in doctor behaviour.

Changing practice is hard, even when physicians agree that a guideline is evidence-based. This difficulty is underscored by another study by Simos et al., which compared rates of advanced imaging in early-stage breast cancer before and after Choosing Wisely, found no reduction in advanced imaging after publication of the Choosing Wisely recommendations, and concluded, “Broader knowledge translation strategies beyond publication are needed if recommendations are to be implemented into routine clinical practice.”

Quite true.

Evidence is not enough: Change, even evidence-based change, is hard

As I’ve learned from personal experience and studying the literature, implementing evidence-based change is not easy. There are a number of barriers to changing practice, not the least of which is sheer, old-fashioned inertia. Practitioners don’t like to change because change is uncomfortable. That’s why, whenever I point out how medicine changes in response to evidence, I also point out that this change is often slower and messier than we would like. There are also incentives, particularly financial ones, for ordering low-value tests and treatments. Hospitals make money when CT scans are ordered, for instance. These issues are acknowledged in an accompanying editorial by David H. Howard and Cary P. Gross, who also raise an important point:

High-quality evidence is critical for identifying and discouraging the use of low-value care. In the absence of this evidence, health care system leaders and administrators may have difficulty convincing front-line physicians to change practice patterns. Frequently, however, such evidence is lacking. Many of the interventions cited in the Choosing Wisely campaign were deemed low value precisely because they have not been tested in trials. For example, the American Academy of Neurology recommended against carotid endarterectomy for patients with asymptomatic carotid stenosis because no recent randomized clinical trials have compared carotid endarterectomy with medical management.2 Observational studies are useful in identifying potentially low-value services, but trials that ensure the comparability of the treatment and control groups through random assignment are the best approach to convincingly determine whether a service is low value.

Although it is not feasible to conduct a trial of every service mentioned in the Choosing Wisely recommendations, the lack of high-quality evidence will hamper efforts to reduce low-value care. Clinical training increasingly focuses on the use of evidence to guide patient management. Clinicians are more likely to respond to a recommendation to avoid a low-value treatment if the recommendation is based on sound evidence than if it is justified on the grounds that the treatment has never been proven to be superior to another treatment. The absence of evidence is not the same as evidence of ineffectiveness.

I couldn’t help but notice a parallel in this argument to CAM, in that saying a CAM treatment has no evidence of effectiveness has less of an effect on physicians than saying that it has evidence of ineffectiveness. Actually, that’s probably not true, given how CAM advocates are now invoking the power of placebo to explain how CAM treatments “work.” But I digress. I’ll stop.

The point above, however, is an important one. As I like to say at times, part of the reason why comparative effectiveness research (and, let’s face it, that’s exactly what we’re talking about here) is so unattractive to many clinical investigators is that it doesn’t test anything new, and we like to test new things. Testing old things is, to some degree, about as exciting as watching paint dry. Until recently, such research wasn’t particularly well funded, either, because companies have little incentive to pay for a trial that offers them only a downside: the risk of a negative result that discourages use of their product. Moreover, as the authors note, patients and physicians tend to be reluctant to participate in trials of interventions that are already in practice and in whose efficacy they strongly believe.

Howard and Gross note that physicians have responded variably to negative clinical trial results, with effects on practice ranging from little to immediate. Their examples include trials of arthroscopic surgery in patients with osteoarthritis, epidermal growth factor receptor inhibitors in metastatic colorectal cancer, and percutaneous coronary intervention for patients with stable angina. They then make a proposal:

We propose that research funders and other interested parties, such as the National Institutes of Health, the Patient-Centered Outcomes Research Institute (PCORI), the Agency for Healthcare Research and Quality, large payers, and patient advocacy groups, undertake a major initiative to identify and fund randomized clinical trials of costly and established but untested treatments. Studies that test the equivalence (or noninferiority) of treatments with markedly different costs should be prioritized based on the potential effect of their findings on health care spending. When the costs of various treatments or approaches to practice are unknown, studies should include costs as an end point. Although other randomized clinical trials aim to identify treatments with superior outcomes, value-based research would answer the question, “Can we achieve equivalent outcomes at a lower cost?” In addition, implementation science can shed light on which trials have an effect on clinical practice and identify mechanisms to increase the effect of new evidence. Developing an infrastructure for conducting these types of trials will become even more important if the US Congress passes the 21st Century Cures Act,10 which will lower the barriers to US Food and Drug Administration approval of certain medical devices and drugs. If enacted, it is likely that even more treatments will enter practice with limited evidence of effectiveness. Information from postapproval studies will be even more important than it is today.

I found it satisfying that Howard and Gross basically agree with me that the 21st Century Cures Act would likely lead the FDA to approve medical devices and drugs with limited evidence of effectiveness. That aside, the key to improving science-based standards of care is to provide rigorous evidence.

Where I disagree with Howard and Gross is in their seeming assumption that high-quality evidence for or against a procedure is almost enough. As I’ve learned in my own experience in writing for this blog, that is only the starting point. Systems need to be constructed to monitor practice and compare it to science-based guidelines, and mechanisms need to be designed to encourage the abandonment of low-value tests and interventions and the adoption of those supported by evidence. Then there’s the hardest part of all: Testing whether these changes result in improved outcomes, which is just as hard as setting up the system, if not harder. In another accompanying editorial, Ralph Gonzalez describes the difficulties and what needs to be done to bring about change. Key among these efforts is a realignment of incentives:

To actually reduce wasteful medical practices, delivery systems and clinician groups must (1) accept and commit to the Choosing Wisely challenge and (2) develop and implement strategies that make it easier for clinicians to follow Choosing Wisely recommendations.

A better alignment of incentives is critical for getting delivery system leaders to commit to the Choosing Wisely campaign. In a fee-for-service system, most delivery systems continue to get paid for tests and drugs, regardless of their appropriateness or indication. Payers are able to pass on these costs to employers and patients, creating a vicious cycle. Some possibilities for breaking this cycle include intense pressure or demand from consumers (eg, through continued grassroots efforts from consumer groups involved in the campaign), new regulations such as from the Centers for Medicare & Medicaid Services or the Joint Commission that require monitoring and reductions in unnecessary medical practices, and/or required external reporting of benchmarks tied to the Choosing Wisely recommendations.

Therein lies the challenge. You can’t change practice if you don’t know what practice is. That’s a major value of CQIs: measuring what is being done and comparing it to the best clinical guidelines. Generating the high-quality evidence necessary to construct guidelines and translating that evidence into change will require a sustained effort. As much as physicians want to do the right thing for their patients, changing behavior in large numbers of people is among the most difficult human endeavors of all.